A BizTalk DTA database can unexpectedly grow from a small tracking database into a huge one that takes up all disk space on your server. I ran into this at a client and saw that the consequences can be serious: BizTalk Server stopped processing messages, the databases could no longer be backed up, and so on. Not really something you want to happen in a critical environment.

The following short guideline can therefore be used both as a precaution and as a fix when you are already dealing with a huge DTA database.

1. Make sure you have enough disk space

The DTA database can grow very quickly, so it is best to plan for a generously sized disk to store it on. According to Microsoft guidelines, a DTA database stays healthy in size up to 15GB. Everything above 15GB is considered problematic and needs to be dealt with.

Adding the size of the DTA db to that of the other BizTalk databases, make sure you have around 30GB of disk space allocated for the databases themselves. If the backups of the databases are stored on the same disk, allocate at least 40GB, and keep in mind that this is the absolute minimum!

2. Enable the DTA Purge and Archive job

The DTA Purge and Archive job in SQL Server clears completed and failed messages after a configurable time. We distinguish between 2 situations here: one where there is no problem with the database yet, and one where we’re struggling with a huge DTA database and disk space issues:

No db problem, just precautionary

By default, the DTA Purge and Archive job calls the BackupAndPurge stored procedure (dtasp_BackupAndPurgeTrackingDatabase). The call takes the following parameters:

exec dtasp_BackupAndPurgeTrackingDatabase

1, --@nLiveHours tinyint, --Any completed instance older than the live hours +live days

0, --@nLiveDays tinyint = 0, --will be deleted along with all associated data

1, --@nHardDeleteDays tinyint = 0, --all data older than this will be deleted.

'[path to the backuplocation]', --@nvcFolder nvarchar(1024) = null, --folder for backup files

null, --@nvcValidatingServer sysname = null,

0 --@fForceBackup int = 0

The first 3 parameters indicate the following: the number of hours that a completed instance is kept in the database; the number of days that a completed instance is kept in the database; and the number of days after which all data is deleted, including instances that never completed (such as failed ones).

The first 2 (both for completed instances) are added up, so you can for example keep completed instances for 2 days and 3 hours. Everything older than that is removed.
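
To make the arithmetic concrete, here is a minimal Python sketch of how the two cut-off moments follow from the parameters. It only illustrates the addition described above and is not how the stored procedure is implemented; the function name `purge_cutoffs` and the sample values are hypothetical.

```python
from datetime import datetime, timedelta


def purge_cutoffs(now, live_hours, live_days, hard_delete_days):
    """Return (soft_cutoff, hard_cutoff) for the given retention settings.

    Illustration only: this mirrors the description in the text,
    not the actual logic inside the BizTalk stored procedure.
    """
    # Live hours and live days are added together for completed instances.
    soft_cutoff = now - timedelta(days=live_days, hours=live_hours)
    # The hard-delete limit applies to all data, completed or not.
    hard_cutoff = now - timedelta(days=hard_delete_days)
    return soft_cutoff, hard_cutoff


# Hypothetical settings: keep completed instances for 2 days and 3 hours,
# and hard-delete everything older than 5 days.
now = datetime(2011, 12, 27, 12, 0, 0)
soft, hard = purge_cutoffs(now, live_hours=3, live_days=2, hard_delete_days=5)
print(soft)  # 2011-12-25 09:00:00
print(hard)  # 2011-12-22 12:00:00
```

With these sample values, a completed instance from before December 25, 09:00 would be eligible for purging, and anything from before December 22, 12:00 would be removed regardless of its state.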

DB problem, disk space issues

When you are struggling with a huge DTA db and disk space issues, the stored procedure call above can fail, because the backup of the db can no longer be made: there is no space left on the disk to put the backup on.

In this case, we need to change the DTA Purge and Archive job. Instead of calling the BackupAndPurge procedure, we will call the Purge procedure, which does not require a backup of the database.

To be on the safe side, stop all BizTalk services while this script is running and start them again after step 3.

Replace the original procedure call in the step of the DTA Purge and Archive job with this:

declare @dtLastBackup datetime

set @dtLastBackup = GetUTCDate()

exec dtasp_PurgeTrackingDatabase 1, 0, 1, @dtLastBackup

After having done this, run the job. BEWARE! This can take quite some time if the purge hasn’t run for a while, so give it time to finish (in my case it took about 3 minutes, and it hadn’t been that long since the script had last run).

3. Shrink the database

This step is often forgotten, but after purging the database we’re not finished yet. The purge deletes the rows from the DTA db, but the space those rows occupied stays allocated to the database files. Therefore, you might see a small change in size, but not the big change that we’re looking for.

What really matters here is shrinking the DTA database. This releases the space that is no longer in use, which frees up a significant amount of disk space.

Just right click on the BizTalkDTAdb and choose Tasks – Shrink – Database as pictured below.

You will be presented with a window where you can see how much space exactly will be cleared. In my case here, only 158MB will be cleared since I’ve already optimized my DB.

Just click OK and let it do its work. BEWARE again: this can also take a lot of time. In my optimization scenario, 2.5GB had to be cleaned up and it took up to 10 minutes to execute, so be patient; it will be worth the wait.

After this is done, the window will just disappear.

If you stopped your BizTalk services/Host Instances, you can start them again now.

4. Finish it up

Just do a quick check on the size of your database. Normally, you should see a huge difference in db size, depending of course on how badly your database was affected.

If you have any problems or questions, shoot in the comments.

Author: Andrew De Bruyne

Tuesday, December 27, 2011

Saturday, December 10, 2011

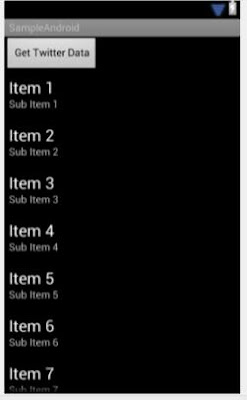

Introduction to Android App development (Devoxx 2011)

This session started with an explanation of what Android is and how it was built.

Android is a platform developed by the Open Handset Alliance.

The platform is built in different layers.

- Lowest layer: based on Linux, used for the hardware

- On top of Linux: libraries and the Android runtime

- Application framework

As you all know, Android development is done in Java, but underneath this layer lies bytecode in a format called “dex”.

When you want to run your app on an Android phone, the class files are converted into a dex file so the app can be used on any Android phone (depending on the app’s requirements).

For each app we can reuse existing functionality: a subset of Java SE is available, along with an Android-specific API.

Taking this into account we can do amazing things, but remember it’s just a phone.

So we cannot develop apps that need a massive amount of memory or storage, as both are limited on a phone. We also need to think about garbage collection.

How to start developing?

There are plugins for Eclipse and emulators for testing. (See http://www.android.com/ for more information on how to install the plugin.)

What does an Android project look like?

Some important files

main.xml : describes the layout, i.e. what you see on screen

R.java : the class file that is generated automatically when your project is compiled. This file will be converted into dex code so your app can be used on an Android device.

Android manifest file : this file is best described as the properties of the app. It is read on installation and can prompt the user to allow access to, for example, the internet or the current location.

This file is also used on the android market to filter apps.

strings.xml : for static text, you define a key here and refer to that key wherever the text has to be displayed (key-value pairs).

When creating a new project, you have to create an Activity.java file. This is the root file which is called when the app is opened.

Layouts: you can create specific layouts for portrait or landscape mode.

Lifecycle of apps

Android has a set of rules for killing apps to free up memory. So when developing apps we need to keep in mind to “save” the state of our app, for instance before the device is flipped. Flipping your device triggers the lifecycle: the current state is destroyed, and if you haven’t saved it, it cannot be restored.

SQLite

This is the database used in Android. Each app has its own database.

Hope this helps you on your way to develop your first android app.

Author : Jeroen W.

Monday, December 5, 2011

SPDY protocol

The new Google SPDY protocol is another attempt to make the web more efficient and reliable. The SPDY protocol introduces an extra layer between HTTP and TCP/IP (actually SSL/TLS) that primarily allows for multiplexing and parallelizing multiple HTTP requests over a single SSL connection.

The SPDY protocol is not some lab exercise but is used in production! The Google Chrome browser uses the SPDY protocol (or should we say extension?) to communicate with most of Google's applications. SPDY remains mostly a Google thing, with no adoption by other big names (except for Amazon EC2 it seems).

With SPDY, the Chromium browser needs to establish fewer SSL connections. But more importantly, the Chrome browser can launch many HTTP requests in parallel, no longer restricted by a maximum number of TCP/IP connections.

But this made me think: could this have a positive impact on how service consumers are implemented? Similarly to a browser parallelizing the retrieval of web content, (web) service consumers should also try to parallelize as much as possible.

The HTTP request/response model that underlies web services should not lock us into a synchronous RPC paradigm whereby a requestor blocks waiting for a response. To fully leverage this potential for parallelism, we must move to a non-blocking, AJAX-like programming model.
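
As a rough illustration of the idea, the Python sketch below issues several service calls at the same time instead of one after the other. It uses a thread pool rather than true non-blocking I/O, and `call_service` is a hypothetical stand-in that only simulates latency; a real consumer would perform an HTTP request there.

```python
from concurrent.futures import ThreadPoolExecutor
import time


def call_service(name):
    """Hypothetical stand-in for a blocking web service call."""
    time.sleep(0.1)  # simulate network latency
    return f"response from {name}"


def call_all(names):
    """Issue all calls concurrently and return the responses in request order."""
    with ThreadPoolExecutor(max_workers=max(1, len(names))) as pool:
        return list(pool.map(call_service, names))


services = ["customers", "orders", "invoices"]  # hypothetical service names
start = time.perf_counter()
responses = call_all(services)
elapsed = time.perf_counter() - start
print(responses)
print(f"{elapsed:.2f}s for {len(services)} calls")
```

Called sequentially, the three simulated calls would take roughly three times the latency of one call; issued in parallel, the total stays close to the latency of the slowest call.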

While reading about SPDY, I encountered 2 links worth looking into:

- Recent blog entry by F5 that is a critical review of the SPDY protocol.

- Article that starts with SPDY but goes much further into wild (?) and interesting ideas to re-engineer the workings of the Internet in a more dramatic way.

Author: Guy

Tuesday, November 29, 2011

How to use drag-and-drop for creating a reusable processing rule in IBM Datapower

The basic method of creating a reusable processing rule is by dragging the cursor over a part of the existing processing rule. For a very small group of actions this is fine. If you want to make the rule a bit more advanced you probably want to create the processing rule from scratch. This can be achieved by creating a ‘Call processing rule’ action and create the reusable processing rule starting from there.

The problem with this approach is that you cannot use the Datapower drag-and-drop interface you use for all other processing rules. The GUI presents you the same view you get for all processing rules in the ‘Objects’ menu. It lists the different actions, but that’s it. No overview of the used contexts on mouse-over, or other details of the actions besides their name.

A simple solution to overcome this problem is to create the reusable rule in a Multi-Protocol Gateway Policy.

Go to the page ‘Multi-Protocol Gateway Policy’ by following the link in the menu on the left (Services>Multi-Protocol Gateway>Multi-Protocol Gateway Policy).

Create a new policy that will contain all future reusable rules. In my example I called it ‘ReusableRules’.

You can open this policy and add as many rules to it as you like. For the match action you can pick any existing match (e.g. all (url=*)). This match is only used in this policy. When calling a processing rule from within another rule, the match action is not used.

To call this processing rule from within the ‘Call processing rule’ action you just have to select your newly created rule in the dropdown-box as you can see in the screenshot below.

Author: Tim

Thursday, November 24, 2011

Technical: Using REST web services with 2-legged OAuth on datapower.

2-legged OAuth consists of:

• The client signs up to the server. The server & client have a shared secret.

• The client uses this key to gain access to resources on the server

What is REST ?

GET method

The client (secret=password) sends the following request to datapower.

Request: GET http://testname:1010/testname?name=KIM

Header: Authorization: OAuth oauth_nonce="12345abcde",

oauth_consumer_key="Kim",

oauth_signature_method="HMAC-SHA1",

oauth_timestamp="1319032126",

oauth_version="1.0",

oauth_signature="m2A6bZejY7smlH6OcWwaKLo7X4o="

oauth_nonce = A randomly-generated string that is unique to each API call. This, along with the timestamp, is used to prevent replay attacks.

oauth_consumer_key = the client’s key.

oauth_signature_method = the cryptographic method used to sign.

oauth_timestamp = the number of seconds that have elapsed since midnight, January 1, 1970, also known as UNIX time.

oauth_signature = The authorization signature.

On datapower a Multi-protocol gateway needs to be configured allowing requests with an empty message body.

The secret needs to be shared between client and datapower. To make everything easy I stored the shared secret in an xml file in the “local:” file system of datapower.

The next step is verifying the signature. The best way to verify the signature is recreate the signature with the shared secret and then compare the two signatures. Of course to do this, some xslt skills are recommended.

This is the signature base-string that will be signed with the shared secret.

GET&http%3A%2F%2Ftestname%3A1010%2Ftestname&name%3DKIM%26oauth_consumer_key%3DKim%26oauth_nonce%3D12345abcde%26oauth_signature_method%3DHMAC-SHA1%26oauth_timestamp%3D1319032126%26oauth_version%3D1.0

How do we get to this?

If you look closely you see that there are 3 distinct parts:

- GET&

- http%3A%2F%2Ftestname%3A1010%2Ftestname&

- name%3DKIM%26oauth_consumer_key%3DKim%26oauth_nonce%3D12345abcde%26oauth_signature_method%3DHMAC-SHA1%26oauth_timestamp%3D1319032126%26oauth_version%3D1.0

GET = the http method used.

The second part is the URL which is url-encoded.

We’ll need to percent-encode all of the parameters when creating our signature. The "reserved" characters must be converted into their hexadecimal values and preceded by a % character. The rest of the characters are "unreserved" and must not be encoded.

The reserved characters are:

! * ' ( ) ; : @ & = + $ , / ? % # [ ] white-space

These are the percent-encoded values of the above characters:

%21 %2A %27 %28 %29 %3B %3A %40 %26 %3D %2B %24 %2C %2F %3F %25 %23 %5B %5D %20

Be careful with the URL encoding functions built into languages: they may not encode all of the reserved characters, or may encode unreserved characters.
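
To show what that warning means in practice, here is a small Python sketch (Python is used purely for illustration; the post itself does this in XSLT on DataPower). The helper name `oauth_percent_encode` is hypothetical. It relies on the standard `urllib.parse.quote`, told explicitly that only `~` is safe in addition to the letters, digits, `-`, `.` and `_` that it never encodes.

```python
from urllib.parse import quote, quote_plus


def oauth_percent_encode(value):
    """Percent-encode everything except A-Z a-z 0-9 - . _ ~"""
    return quote(value, safe="~")


print(oauth_percent_encode("http://testname:1010/testname"))
# http%3A%2F%2Ftestname%3A1010%2Ftestname

# Built-in helpers with default settings behave differently:
print(oauth_percent_encode("a b"))  # a%20b
print(quote_plus("a b"))            # a+b  (space becomes '+', not %20)
print(quote("a/b"))                 # a/b  ('/' is left alone by default)
```

The last two lines are exactly the kind of mismatch that produces a signature the server cannot reproduce.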

<xsl:variable

name="BasicUrl"

select="dp:encode(string(

dp:variable('http://testname:1010/testname')

),'url')

"/>

The third part gets a bit more complicated to do with xslt, because the number of parameters and OAuth header fields can change.

We need to extract the OAuth header from the request.

<xsl:variable

name="OAuthParams"

select="dp:request-header('Authorization')"

/>

The URL parameters are also necessary. The parameters in this example are “name=KIM”. Put them together with the OAuth header fields (all except oauth_signature) and sort them alphabetically. You will get a string like this:

name=KIM&oauth_consumer_key=Kim&oauth_nonce=12345abcde&oauth_signature_method=HMAC-SHA1&oauth_timestamp=1319032126&oauth_version=1.0

And again url-encode this string using the datapower function (dp:encode). If you now concatenate the 3 parts with a “&” you will get the signature base string.
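
The three steps above can be sketched in a few lines of Python, shown here only to make the construction easy to follow and to check by hand. The function names `enc` and `signature_base_string` are hypothetical; the input values are the ones from the example request.

```python
from urllib.parse import quote


def enc(value):
    """Percent-encode everything except A-Z a-z 0-9 - . _ ~"""
    return quote(value, safe="~")


def signature_base_string(method, url, params):
    """Build the base string from the HTTP method, the URL without its
    query string, and all query/OAuth parameters except oauth_signature."""
    # Encode names and values, then sort them alphabetically.
    pairs = sorted((enc(k), enc(v)) for k, v in params.items())
    normalized = "&".join(f"{k}={v}" for k, v in pairs)
    # Encode each of the 3 parts and join them with '&'.
    return "&".join([method.upper(), enc(url), enc(normalized)])


params = {
    "name": "KIM",
    "oauth_nonce": "12345abcde",
    "oauth_consumer_key": "Kim",
    "oauth_signature_method": "HMAC-SHA1",
    "oauth_timestamp": "1319032126",
    "oauth_version": "1.0",
}
base = signature_base_string("GET", "http://testname:1010/testname", params)
print(base)
```

For the example request this prints the same signature base string as shown earlier in this post.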

The next thing we need to calculate the signature is the signing key. The OAuth standard builds this key by concatenating the shared secret and the token secret (<secret>&<token>). Since we are working with 2-legged OAuth, there is no token and that part stays empty. In the example we used the shared secret “password”, so it is important to still add the “&” behind the secret: “password&”. Now we have the necessary information to calculate the signature.

<xsl:variable name="Calculate_Signature"

select="dp:hmac('http://www.w3.org/2000/09/xmldsig#hmac-sha1',

'password&amp;',

<SignatureBaseString>)"/>

Finally we can compare the signature of the OAuth header with our own calculated signature. Don’t forget to validate the timestamp against a predefined limit e.g. 5 minutes. If all checks out, the client is authorized to use the web service.
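
For readers who want to try the verification outside DataPower, the following Python sketch performs the same two checks: the timestamp window and the signature comparison. It is an illustration under assumptions, not the XSLT used in this project: the function names, the 300-second default and the sample base string in the demo are hypothetical, and the secret is assumed to contain no characters that would need percent-encoding.

```python
import base64
import hashlib
import hmac
import time


def sign(base_string, consumer_secret, token_secret=""):
    """HMAC-SHA1 signature, base64-encoded. With 2-legged OAuth the token
    secret is empty, so the key is the secret followed by '&'."""
    key = f"{consumer_secret}&{token_secret}".encode("utf-8")
    digest = hmac.new(key, base_string.encode("utf-8"), hashlib.sha1).digest()
    return base64.b64encode(digest).decode("ascii")


def is_authorized(base_string, received_signature, oauth_timestamp,
                  consumer_secret, now=None, max_age_seconds=300):
    """Check the timestamp window first, then compare the signatures."""
    now = time.time() if now is None else now
    if abs(now - int(oauth_timestamp)) > max_age_seconds:
        return False
    expected = sign(base_string, consumer_secret)
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected.encode("utf-8"),
                               received_signature.encode("utf-8"))


# Round trip with a made-up base string and the example secret "password".
demo_base = "GET&http%3A%2F%2Ftestname%3A1010%2Ftestname&name%3DKIM"
demo_sig = sign(demo_base, "password")
print(is_authorized(demo_base, demo_sig, "1319032126", "password",
                    now=1319032126 + 60))   # True
print(is_authorized(demo_base, demo_sig, "1319032126", "password",
                    now=1319032126 + 600))  # False
```

The second call fails only because the request is 10 minutes old, which is outside the 5-minute window used here.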

link to check OAuth signature

POST method is coming soon…

Author: Kim

Tuesday, November 15, 2011

Exposing a webservice in JBoss ESB

Webservice-based communication is of course at the core of SOA and as such the support should be easy and robust.

As the video demonstrates it is very easy to expose a service as a webservice and optionally demand validation.

Additionally it shows you how to enable WS-Security.

There are other ways to work with webservices:

- you can expose native jboss webservices

- create proxy webservices

- poll remote webservices

Link: http://www.youtube.com/watch?v=xflkWQZZsHE

Author: Alexander Verbruggen

Monday, November 14, 2011

OAuth

With the upcoming Devoxx conference, I did some reading last weekend. With Fri Nov 11 as a national holiday in Belgium - because of the end of World War I - I had some extra time. Looked a bit into the most recent developments around HTML 5 and Android development.

Quickly I ended up diving deeper into REST. Must confess that I was very WS-* minded and was not really impressed by REST initially. But with the incompleteness of WS-* and the success of REST, I'm changing my mind.

So I ended up browsing through the book "Restful Java with JAX-RS". This REST stuff triggered me into looking into different REST API's, including the one from Dropbox. And Dropbox security is based on OAuth, which triggered me to dive (back) into OAuth.

Looked for an OAuth book on Safari and Amazon, but none (yet?) available. So I ended up re-reading chapter 9 of the book "REST in practice". By the way, very good book, I like it. Some great links while looking around:

- The introduction on OAuth

- Good OAuth introduction by Yahoo

- Google OAuth Playground, to see OAuth live in action

While looking into OAuth, I started making the comparison with WS-Security and SAML in particular. With OAuth, no XML signing nor XML canonicalization, the option to use HMAC instead of keypairs and certificates. So simpler, but not simple!

Note: one of my I8C colleagues (Kim) just finished a project on a DataPower appliance to implement OAuth support

Author: Guy

Tuesday, November 8, 2011

jBPM Orchestration in JBoss ESB

The third installment in the JBoss ESB video series features the jBPM module which introduces service orchestration and human task flows.

The first video shows you how to set up a simple jBPM process:

Link: http://www.youtube.com/watch?v=jFBEqhAuOCw

The second one covers a few additional topics like variables passing back and forth between jBPM and the ESB services as well as user-driven branching:

Link: http://www.youtube.com/watch?v=9Mhd7VO0-oA

Author: Alexander Verbruggen

Wednesday, November 2, 2011

Custom java service in JBoss ESB

This second video in the JBoss ESB series demonstrates how to create a simple custom java service that prints out the message content it receives.

Link: http://www.youtube.com/watch?v=TfgUW0D-aAo

Author: Alexander Verbruggen

Thursday, October 27, 2011

Oracle Openworld 2011 through the eyes of an I8C employee

In the beginning of October I was one of the 45,000 attendees from 117 countries.

If someone asks me next year what the 2011 Open World was about, I will definitely remember these keywords: CLOUD and EXA.

Exalytics and Supercluster were announced during several keynotes. These new children of the EXA family will allow Oracle to power private clouds and their own public cloud even better. These machines are impressive and they are definitely an asset to your corporation ... if you can pay for them :-) In any case the specs for Exalogic, Exadata and Supercluster are pretty amazing. Who would have thought a few years back that you could have a machine that can load a few TB of data in memory?

As mentioned before, Larry Ellison also announced the Oracle Public Cloud. Their cloud offering is mixed: it offers a SAAS model for the CRM and HCM Fusion applications and Oracle Database, and a PAAS offering for custom Java applications. Maybe the riot with Salesforce was only to get extra attention for this announcement ;-)

While the cloud wars continued I was trying to get a roadmap for fusion middleware for the next years. I however could not seem to find it, which was a pity.

A keynote that did provide a roadmap was at JavaOne. For Java 8, the most interesting items for me are: the addition of lambda expressions, Java modularity and completion of the HotSpot/JRockit JVM convergence project. It was also nice to hear that Twitter will join the OpenJDK!

At OpenWorld, some of the most interesting sessions I followed were:

- A hands on workshop for Oracle CEP: this is a really interesting tool. Even though you can freely download and play with it, it is nice to just walk in a room to play with the tool and get all the information that you want, straight from the PTS guys.

- A more in depth talk on Oracle Coherence gave me some more insight in this product. A distributed cache is something that is used in many applications nowadays, mainly to boost performance to new limits. In middleware and more specifically in ESB solutions this technology is interesting to cache configuration data, offload frequently used data from the database, cache service results and sometimes it is even used for messaging.

- Continuous integration for SOA and BPM projects: this was a very interesting session on how to do more Test Driven Development (TDD) in an Oracle SOA environment. They also showed how to use popular tools like ant and Jenkins.

- There were also some panel sessions during the conference. The one I found most interesting was on OSB and how to tune this ESB for peak performance. From the questions from the audience you can really see that this ESB is widely used in massive deployments.

Author: Jeroen V.

Monday, October 24, 2011

Random errors when using MQSeries adapter in Clustered Environment

In the following article, we describe an error that we've encountered in an MSCS cluster with MQSeries installed, using Microsoft BizTalk 2006 R2.

The problem is that the default TCP Keep Alive interval in Windows seems to be 2 hours. So when a Keep Alive packet is sent towards the cluster over a connection that has been idle for more than 1 hour, it returns an error, since the TCP session on the network has already been closed.

Obviously, the most logical way to avoid these errors is to change the Keep Alive time on the Windows (BizTalk) servers. This can be done by changing a registry value on your BizTalk servers, followed by a reboot.

The following link describes which value to set:

http://technet.microsoft.com/en-us/library/cc782936(WS.10).aspx

The Problem

Well, it's not exactly one error we've encountered. The problem actually consists of multiple random warnings that pop up in the event viewer when using the MQSeries adapter (using the MQSAgent2 COM+ component) whilst sending messages towards this adapter. Strangely enough, we also noticed these warnings popping up when there is absolutely no traffic on the server. Some samples of the errors:

The adapter "MQSeries" raised an error message. Details "The remote procedure call failed and did not execute. (Exception from HRESULT: 0x800706BF)"

--

The adapter "MQSeries" raised an error message. Details "Unable to cast object of type 'System.__ComObject' to type 'Microsoft.BizTalk.Adapter.MQS.Agent.MQSProxy'."

--

The adapter failed to transmit message going to send port "prtSendSecLendMsgStatusSLT_MQSeries" with URL "MQS://BDAMCAPP100/MQPRD205/FIAS.QL.SLT_BT_IN.0001". It will be retransmitted after the retry interval specified for this Send Port. Details:"The remote procedure call failed and did not execute. (Exception from HRESULT: 0x800706BF)".

--

The adapter "MQSeries" raised an error message. Details "Unable to cast object of type 'System.__ComObject' to type 'Microsoft.BizTalk.Adapter.MQS.Agent.MQSProxy'."

--

The adapter failed to transmit message going to send port "prtSendSecLendMsgStatusSLT_MQSeries" with URL "MQS://BDAMCAPP100/MQPRD205/FIAS.QL.SLT_BT_IN.0001". It will be retransmitted after the retry interval specified for this Send Port. Details:"The remote procedure call failed and did not execute. (Exception from HRESULT: 0x800706BF)".

The Impact

It might seem that these warnings are "just" warnings, but whenever one of them is encountered, it causes the sending of messages towards the MQSeries adapter to fail. This triggers the BizTalk retry mechanism, which works with a configured number of retries. The retry interval is specified in minutes, and the lowest possible value is 1. This means that when a problem occurs and the message needs to be resent, there is a minimum delay of 1 minute on every such message. In an environment where large amounts of messages are being processed, this can be a huge pain point.

The Solution

After investigation, we found that the source of this issue lies at the network level of the servers. Idle TCP sessions on the client's network are closed after 1 hour. This seems to be a common default setting in a lot of environments, and is certainly not a bad thing. The problem is that the default TCP Keep Alive interval in Windows is 2 hours. So when a Keep Alive packet is sent towards the cluster over a connection that has been idle for more than 1 hour, it returns an error, since the TCP session on the network has already been closed.

Obviously, the most logical thing to do to avoid these errors is to lower the Keep Alive time on the Windows (BizTalk) servers. This can be done by changing a registry value on your BizTalk servers, followed by a reboot.

The following link describes which value to set:

http://technet.microsoft.com/en-us/library/cc782936(WS.10).aspx
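
To make the arithmetic behind this fix a bit more concrete, here is a small Python sketch. It assumes the registry value in question is KeepAliveTime (a DWORD expressed in milliseconds under HKLM\SYSTEM\CurrentControlSet\Services\Tcpip\Parameters), whose default of 7,200,000 ms corresponds to the 2 hours mentioned above. The helper names and the 50% safety margin are purely illustrative assumptions, not values from the linked page, and the script only calculates a candidate value; it does not touch the registry.

```python
# Default Windows keep-alive time: 2 hours, expressed in milliseconds.
WINDOWS_DEFAULT_KEEPALIVE_MS = 2 * 60 * 60 * 1000


def keepalive_time_ms(network_idle_timeout_minutes, safety_margin=0.5):
    """Return a keep-alive time (ms) that stays below the network idle timeout.

    safety_margin is a hypothetical fraction of the idle timeout (0 < margin < 1).
    """
    if network_idle_timeout_minutes <= 0:
        raise ValueError("idle timeout must be positive")
    if not 0 < safety_margin < 1:
        raise ValueError("safety_margin must be between 0 and 1")
    return int(network_idle_timeout_minutes * 60 * 1000 * safety_margin)


def hits_closed_session(keepalive_ms, network_idle_timeout_minutes):
    """True if the first keep-alive packet arrives after the network dropped the session."""
    return keepalive_ms >= network_idle_timeout_minutes * 60 * 1000


if __name__ == "__main__":
    idle_minutes = 60  # the network closes idle TCP sessions after 1 hour
    print("default hits closed session:",
          hits_closed_session(WINDOWS_DEFAULT_KEEPALIVE_MS, idle_minutes))
    print("candidate KeepAliveTime (ms):", keepalive_time_ms(idle_minutes))
```

With a 1 hour idle timeout, the default of 2 hours always arrives too late, while any value comfortably below 3,600,000 ms keeps the session alive.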

Good luck!

Andrew De Bruyne

Sunday, October 16, 2011

Installing an old E1 adapter in recent versions of webMethods

Let’s say you currently run webMethods version 6.5 and you’re upgrading to version 8, but not all subsystems are being upgraded.

The webMethods EnterpriseOne adapter (8.96 one-off Adapter for XPI 8.94) which came with the installation of webMethods 6.5 will be replaced when you install a new EnterpriseOne adapter in version 8.

It may be that your JDE environment is old and that the adapter of version 6.5 is the latest supported version. How can it still be used on webMethods version 8?

First step: export the current EnterpriseOne adapter from version 6.5 (Developer -> export) and save it on your machine.

Now copy this export into the replicate -> inbound folder of your new version 8 installation.

Before you can install this package, you need to install the PSFT_PackageManagement package first.

Once that package is installed, open its pub page.

Click install inbound releases and select your exported zip file.

Select that exported zip to be installed and click save changes.

The EnterpriseOne adapter from version 6.5 is now installed and your new webMethods version 8 installation can work with old JDE systems.

Author : JeroenW

Tuesday, October 11, 2011

TUCON2011

Last week TUCON2011 took place in Las Vegas, USA. As the only TIBCO partner with its HQ in Belgium, Integr8 Consulting could not be absent this year! So together with my colleague, I headed out to the far west of the USA.

This year TUCON started with the keynote speakers and the BIG idea track. The focus of these BIG idea tracks is to hear from TIBCO customers and TIBCO visionaries, how they are using TIBCO technologies and how it provides them with the 2-second advantage. For those out there not knowing what the 2-second advantage is, here is a link to a video from Vivek (TIBCO CEO) explaining the 2-second advantage.

Putting the right information at the right time in the right context

These are my 4 keywords I remember from my TUCON visit:

- Tibbr

- Context

- Silver

- Mobility

This brings me to the second keyword, context. One of the keynote speakers stated ‘what if you have a million of data events, but you can’t place it’. And that’s what it’s all about. If you can place the data at the right time in the right context, it will provide you with a 2-second advantage.

The third keyword is Silver. Silver is TIBCO’s brand name for its cloud services, just as ActiveMatrix is for the SOA/BPM platform. Next to Tibbr, Silver will become a focus point for the future. You will get Silver Mobile, Silver Fabric, Silver Spotfire and many more, all providing you with cloud services to get started with TIBCO technology in just a matter of seconds (at least in theory).

Now the fourth keyword may look a bit strange, but this might become the biggest shift in enterprise communication since the rise of email (maybe a bit exaggerated). With the upcoming boost of smartphones, iPads and smart devices (like smart grid readers, which read your electricity usage and send it to your electricity provider), new technologies are needed that make it possible to use your mobile device as… well, as a workstation. If you’re interested in how Silver Mobile will work:

- Silver Mobile will provide you with a platform that runs on your Android, iPhone or BlackBerry. Using the platform, you get a common API that you can use from your mobile framework (jQuery Mobile, etc.) when building your own app.

- Using Silver Fabric you can push your apps from the cloud to the Silver Mobile platform on your company’s iPhones, BlackBerries or Android devices.

- The example shown at TUCON displayed the status of your BW applications on your iPhone. In case something went down, you got a notification through the native notification bus of your mobile device.

In the Orange sessions, we saw an M2M (Machine-2-Machine) example of how Orange is using mobile communication technology. Now imagine the possibilities when you think again about ‘context’. You can analyze the data from a mobile device and correlate it with built-in embedded devices like GPS to provide you with… the context of the data.

To conclude, enterprises will have to adapt their architectures to provide more and more of a context-aware mobility delivery architecture in order to ‘please’ their customers.

Announcements

Next to the BIG idea tracks, days 2 and 3 consisted of technology tracks that gave us some insight into TIBCO’s current developments. I will start this chapter the way TIBCO started each session: all information provided is purely informational and does not legally bind us / TIBCO to any delivery.

I tried to follow a diverse schedule to learn as much as I could, and these are the things that stuck in my mind:

- The ActiveMatrix platform will be extended with a rule engine, TIBCO ActiveMatrix Decisions. This product is built on BusinessEvents and exposes its rules as services, which can be used for example in a BPM process of ActiveMatrix BPM.

- TIBCO ActiveSpaces 2.0 data grid. Wow, was I overwhelmed by this technology. To be honest, I didn’t really know it, but from what I’ve seen at TUCON, I immediately want to get started with it!

- BusinessWorks plugins. Instead of adapter based technology, TIBCO is coming more and more with external plugins that can enhance BusinessWorks. Examples are tibbr, SalesForce, ActiveSpaces and an Aspect plugin that you could use for AOP programming in BW!

- Hawk! What Hawk?? Yes indeed, Hawk is coming with a nice web interface that will provide you with a better overview of your TIBCO Administrative domains. And as most products will do, Hawk will also integrate with Tibbr and maybe Spotfire in the future.

- Nimbus. This newly acquired technology provides you with a tool for documenting your business processes in a way your business can understand them. Don’t see it as an automation engine but rather as a tool for documenting (discovering) your business processes. If TIBCO connects AMX BPM and Nimbus so that information is exchanged between them, it might become a strong product bundle.

- TIBCO EMS / FTL. During the engineering roundtable, a discussion came up about what the future of messaging will look like. In the end there is no real answer. EMS is still the messaging solution if you want guaranteed, reliable messaging. FTL is the future for TIBCO when it comes to really fast messaging.

If you visited TUCON this year, maybe you joined some other sessions and came away with other ideas than I did. Please share your experiences. By sharing information and putting it in the right context, we can get that 2-second advantage!

Author: Günther

Tuesday, September 20, 2011

JBoss ESB - A simple file poller

A while ago I took JBoss ESB for a spin to see how it compares to other tools we frequently use (both in terms of features and usability). I created a few how-to videos which can be used as a reference to implement some common use cases. Though they assume a working JBoss ESB server and the appropriate Eclipse tooling, they are generally pretty straightforward.

This first video demonstrates how to set up a (very) simple file poller. Subsequent tutorials will highlight more advanced features like XSLT transformations, jBPM and some Smooks-based CSV parsing. Please note that it is best viewed at 1080p or original resolution.

Link: http://www.youtube.com/watch?v=GOKFl79W1To

Author: Alexander Verbruggen

Saturday, September 10, 2011

Migrating Trading Networks data between v6.5 and v8 on different servers

The client I’m currently working for requires a new webMethods 8 environment, but this has to be a copy of an existing 6.5 environment, which should stay. They created new servers so I could install all required components on this new machine for a version 8 with Trading Networks.

They required a clean install, but needed to have the code and TN data from the version 6.5 on the new version 8 installation. The installation itself was easy, just as the setup of the environments (e.g. ports, security, LDAP, …)

When I reached the point where I wanted to migrate the Trading Networks data, I ran into some issues. The biggest problem was that we have an existing database, wm65tn, which holds all the data, and we had to create a new one, wmTN, for version 8, as they still want to use version 6.5.

The first thing I tried was to deploy the Trading Networks settings. This was already an issue, as the old environment was behind a firewall that had to be opened for traffic towards the new version 8 environment. Once the firewall was opened I could finally try to deploy all TN settings, but this didn’t work.

webMethods Deployer won’t deploy TN data between different versions (6.5 and 8).

Next I tried to export and import all data into the new version. The same issue appeared again: copying data between 6.5 and 8 is not allowed.

My next option was to install a second version 6.5 database, copy all the data there and then run a migration on that database.

So with this last step in mind I started. First bump in the road: the disk where all databases were stored didn’t have enough free space. After freeing up the required space I could create the database (wmTN). Once it was created, I detached the old version 6.5 database and copied its MDF and LDF files (SQL Server).

Now we need to detach the wmTN database and rename the log files to the log file names that are linked to this database.

Now the new database has the same data as the old one, so we can attach the databases to SQL Server again.

The next step is to upgrade the database according to the webMethods documentation.

Unfortunately there was a step which mentioned “if errors occured, contact Software AG Customer Care” and yes, lucky me, I got to contact Software AG.

The reason was this error:

[wm-cjdbc40-0034][SQLServer JDBC Driver][SQLServer]Foreign key 'fk_PtnrUser_PtnrID' references invalid table 'Partner'.

MIGRATE TRADINGNETWORKS [31] FAILED

After some mails and telephone calls with webMethods support, the issue was still not resolved.

They managed to get past the foreign key issue by simply removing the key, but once that was solved we got the following issue:

*** Data Migration from TN 6.5 to TN 7.1 started...

* Creating Partner-User mappings...

java.sql.SQLException: [wm-cjdbc36-0007][SQLServer JDBC Driver][SQLServer]Invalid object name 'PartnerUser'.

at com.wm.dd.jdbc.base.BaseExceptions.createException(Unknown Source)

at com.wm.dd.jdbc.base.BaseExceptions.getException(Unknown Source)

at com.wm.dd.jdbc.sqlserver.tds.TDSRequest.processErrorToken(Unknown Source)

at com.wm.dd.jdbc.sqlserver.tds.TDSRequest.processReplyToken(Unknown Source)

at com.wm.dd.jdbc.sqlserver.tds.TDSRPCRequest.processReplyToken(Unknown Source)

at com.wm.dd.jdbc.sqlserver.tds.TDSRequest.processReply(Unknown Source)

at com.wm.dd.jdbc.sqlserver.SQLServerImplStatement.getNextResultType(Unknown Source)

at com.wm.dd.jdbc.base.BaseStatement.commonTransitionToState(Unknown Source)

at com.wm.dd.jdbc.base.BaseStatement.postImplExecute(Unknown Source)

at com.wm.dd.jdbc.base.BasePreparedStatement.postImplExecute(Unknown Source)

at com.wm.dd.jdbc.base.BaseStatement.commonExecute(Unknown Source)

at com.wm.dd.jdbc.base.BaseStatement.executeUpdateInternal(Unknown Source)

at com.wm.dd.jdbc.base.BasePreparedStatement.executeUpdate(Unknown Source)

at com.wm.app.tn.util.migrate.migratedata_to_tn_71.createPartnerUsers(migratedata_to_tn_71.java:137)

at com.wm.app.tn.util.migrate.migratedata_to_tn_71.main(migratedata_to_tn_71.java:47)

This issue is related to the partner table (which holds all the partner profiles). The first thought was that it was related to the unknown profile because it has an id of 00000000000000. But the contact person of SAG confirmed that this wasn’t the issue as this is the default entry that comes with every TN database.

At this moment the ticket has been open for more than one week and no solution has been found yet.

Today I received another call from SAG and they said they had found the issue. I restored the original 6.5 TN database, so I could follow the correct guidelines from SAG without touching the database structure (removing foreign keys).

The problem was that the original 6.5 database was created with a user specific to this database, and a schema was defined for it.

Solution:

I had to create a user which had a default schema equal to wm65tn (which was the old value) and then run the upgrade script via the command line.

dbConfigurator.bat -a migrate -d sqlserver -c TradingNetworks -v latest -l <db_server_URL> -u <existing_db_user> -p <password> -fv 10

Now the database was upgraded to version 8 and all new tables have the correct schema (wm65tn).

Now run the command migratedata_from_tn_7-1 6.5 from the correct folder; this copies the data from the old tables to the correct new tables (all partner profiles, agreements, …).

E.g.: Partner to PartnerUser

Now a restart of the environment is needed, unless your environment was already down, and the data is available in TN version 8.

Author : Jeroen W.

Tuesday, September 6, 2011

BizTalk is dead, long live BizTalk

At the WPC (Worldwide Partner Conference) 2011, Microsoft revealed its future plans for its integration stack. It seems that BizTalk as a product name will disappear in the near future…

A new integration platform (stack) is being built in the cloud, offering us most of the BizTalk functionality. The same stack will also be made available on-premise, thus replacing BizTalk as we know it today.

It is up to us now to learn to use the new integration stack, in the cloud as well as on-premise. This will keep us busy for the next 5 to 10 years, I presume.

Does this mean that BizTalk will no longer be supported? No! It just means that the integration stack as we know it today will remain fairly unchanged (they keep it aligned with their Windows, SQL and .NET stack) until the new product becomes available on-premise. How long will this take? I assume we will see the new on-premise product within 2 to 3 years. The name of this new product is not yet determined, so maybe “BizTalk” as a name will continue to live :-)

This link gives a good overview of what we may expect. And the WPC presentation video.

Author: Koen

Tuesday, August 30, 2011

JVM performance tuning part 3: JVM tuning

The Java virtual machine can be tuned in several ways. The three most important ones will be discussed in this blog entry:

- Java heap space

- Garbage collection tuning

- Garbage collection ergonomic

Java heap space

As discussed in an earlier blog post, objects reside in the Java heap space. The more objects exist, the more heap space is needed, so the most important part of JVM tuning is sizing the heap correctly. Only after setting the heap to the correct size can you start playing with garbage collection options to further improve performance and reach specific goals.

For Java server applications, Oracle recommends the following regarding Java heap size:

Unless you have problems with pauses, try granting as much memory as possible to the virtual machine. The default size (64MB) is often too small.

Setting -Xms and -Xmx to the same value increases predictability by removing the most important sizing decision from the virtual machine. On the other hand, the virtual machine can't compensate if you make a poor choice.

Be sure to increase the memory as you increase the number of processors, since allocation can be parallelized.

This means that as soon as you start getting the error java.lang.OutOfMemoryError: Java heap space, it’s time to increase the heap. The easiest way to size correctly is by monitoring garbage collection, but this will be discussed in the next blog entry.

Sizing the heap can be done using the parameters -Xms and -Xmx, which define the minimum (initial) and maximum heap size respectively. The memory set by -Xms is also called committed memory and the memory set by -Xmx reserved memory, although only the committed memory is requested from the operating system when the virtual machine starts. The difference between the maximum and the minimum is called virtual memory.

For server applications it’s best to set -Xms equal to -Xmx. This allows the JVM to reserve all the necessary virtual memory at startup of the virtual machine. The best way to explain this is using an example:

Suppose we have a server application that needs 800MB of Java heap after startup. If we set -Xms256m and -Xmx1024m, the JVM will ask the operating system for 256MB of virtual memory for the heap just after creation of the virtual machine. As the server starts and needs more and more memory, the virtual machine will ask the operating system for more. Then you can only hope the operating system is able to provide it. If not, the JVM starts throwing a java.lang.OutOfMemoryError. If we set -Xms1024m and -Xmx1024m, the JVM will ask the operating system for enough virtual memory to create the maximum heap size right away. If that’s not possible the JVM simply won’t start, but you won’t get memory errors related to resizing the heap while the JVM is running.
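
The scenario above can be played through with a small Python sketch. It is only a toy model under stated assumptions: the function names are made up for illustration, the "free memory" figure is a single number rather than anything a real operating system reports, and a real JVM accounts for memory in a far more subtle way. The only thing taken from the JVM itself is the flag syntax (-Xms and -Xmx followed by a size and the m suffix for megabytes).

```python
def heap_flags(initial_mb, max_mb):
    """Build the -Xms/-Xmx command-line flags for sizes given in megabytes."""
    if initial_mb <= 0 or max_mb <= 0:
        raise ValueError("heap sizes must be positive")
    if initial_mb > max_mb:
        raise ValueError("-Xms cannot be larger than -Xmx")
    return ["-Xms%dm" % initial_mb, "-Xmx%dm" % max_mb]


def outcome(needed_mb, initial_mb, max_mb, os_free_mb):
    """Toy model: what happens when the application grows to needed_mb of heap."""
    if os_free_mb < initial_mb:
        # The initial heap cannot even be obtained at startup.
        return "JVM does not start"
    if needed_mb > max_mb or needed_mb > os_free_mb:
        # The heap has to grow at runtime, but the memory is not available.
        return "OutOfMemoryError while running"
    return "runs fine"


if __name__ == "__main__":
    # The application needs 800MB, but only 600MB can actually be obtained.
    for xms, xmx in [(256, 1024), (1024, 1024)]:
        print(" ".join(heap_flags(xms, xmx)), "->", outcome(800, xms, xmx, 600))
```

In this model the first configuration starts and fails later, while the second one fails immediately at startup, which is usually the easier failure to deal with on a server.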

By setting -Xms equal to -Xmx the JVM will also start faster: the whole block is reserved at startup, so no overhead is added to allocate new memory blocks while the application starts up.

Another advantage of setting -Xms equal to -Xmx is a reduction in the number of garbage collections, at the cost of a larger pause time for each garbage collection.

Another rule is that the larger the heap size, the larger the pause times will be.

Garbage collection tuning

Since JDK 1.5 update 6, four different types of garbage collectors are available:

- The serial collector (the default one)

- The parallel or throughput collector

- The parallel compacting collector

- The Concurrent Mark Sweep collector

The serial collector is the default collector, where both minor and major collections happen in a “stop the world” way. As the name implies, garbage collections run serially and use only one CPU core, even if more are available.

A young generation collection is done by copying the live objects from the “Eden space” to the empty survivor space (“To space” in the figures). Objects that are too big for the survivor space are copied directly to the tenured space. Relatively young objects in the occupied survivor space (“From space”) are copied to the empty survivor space (“To space”), while relatively old objects are copied to the tenured space. Any remaining live objects in the “Eden space” or “From space” are also copied to the tenured space when the “To space” becomes too small. Objects that are still in the “Eden space” or the “From space” after the copy operation are dead objects and can be swept.

An old generation collection makes use of a mark-sweep-compact algorithm. The mark phase determines the live objects. The sweep phase erases the dead objects. During compaction the objects that are still live are slid to the beginning of the tenured space. The result is a “full” region and an “empty” region in the tenured space. New objects too large for the “Eden space” can be allocated directly in the “empty” part of the tenured space.
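The sliding compaction step can be illustrated with a toy model. The sketch below is not how the JVM implements it; it assumes the tenured space is a plain array of slots and that the mark phase has already produced a live flag per slot. All names are hypothetical.

```java
// Toy model of sweep plus sliding compaction; names are hypothetical.
class SlidingCompaction {
    // slots: object ids in the tenured space, null means a free slot.
    // live:  result of the mark phase, true if the object in that slot is reachable.
    // Returns the number of live objects, i.e. the size of the "full" region.
    static int compact(String[] slots, boolean[] live) {
        int next = 0; // next free position at the beginning of the space
        for (int i = 0; i < slots.length; i++) {
            if (slots[i] != null && live[i]) {
                slots[next++] = slots[i]; // slide the live object towards the start
            }
        }
        // Everything after the live objects becomes the "empty" region.
        for (int i = next; i < slots.length; i++) {
            slots[i] = null;
        }
        return next;
    }

    public static void main(String[] args) {
        String[] slots = {"a", "b", "c", "d"};
        boolean[] live = {true, false, true, false};
        int full = compact(slots, live);
        System.out.println(full + " live objects: " + java.util.Arrays.toString(slots));
    }
}
```

Because live objects keep their relative order, the space ends up split into one contiguous “full” part and one contiguous “empty” part, which is what makes direct allocation of large objects in the tenured space possible.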

The serial collector can be activated by using -XX:+UseSerialGC. This collector is good for client side applications that have no strong pause constraints.
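To verify which collector a JVM actually ended up using after passing one of these options, the garbage collector MXBeans from java.lang.management can be listed. This is a small sketch; the class name ActiveCollectors is made up, and the names reported are implementation-specific and differ between JVM versions.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper class, not part of any library.
class ActiveCollectors {
    // Returns one line per collector registered in this JVM:
    // its name, how often it ran and the accumulated collection time.
    static List<String> report() {
        List<String> lines = new ArrayList<>();
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            lines.add(gc.getName()
                    + " collections=" + gc.getCollectionCount()
                    + " timeMs=" + gc.getCollectionTime());
        }
        return lines;
    }

    public static void main(String[] args) {
        for (String line : report()) {
            System.out.println(line);
        }
    }
}
```

Typically one entry is reported for the young generation collector and one for the old generation collector, so running this with different -XX:+Use...GC options shows the effect of the switch.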

The parallel or throughput collector

The design of this GC is focused on the use of more CPU cores during garbage collection instead of leaving the other cores unused while one is doing all the garbage collection.

The young generation collector makes use of a parallel variant of the serial collector. Although it makes use of multiple cores it’s still a “stop the world” garbage collector. The use of multiple cores decreases total GC time and increases the throughput.

A collection of the old generation happens in the same way as a serial collection.

The throughput collector can be activated by using -XX:+UseParallelGC

This collector is efficient on machines with more than one CPU, but still has the disadvantage of long pause times for a full GC.

The parallel compacting collector

This GC is new since JDK 1.5 update 6 and has been added to perform old generation collections in a parallel fashion.

A young generation collection is done the same way as a young generation collection in the throughput collector.

The old generation collection is also done in a “stop the world” fashion, but is done in parallel with added sliding compaction. The collector consists of 3 phases: mark, summary and compaction. First of all the old generation is divided into regions of fixed length. During the mark phase the objects are divided among several GC threads. These threads mark all live objects. The summary phase determines the density of each region; if the density is large enough no compaction will be performed on that region. As soon as a region is reached for which the density is low enough to make compaction worthwhile (the cost of compaction is low enough), compaction will be performed on that region and all subsequent regions, based on information from the summary phase. The mark and compaction phases run in parallel, while the summary phase is implemented serially.
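The decision taken in the summary phase can be sketched as a simple scan over the regions. This is only an illustration of the idea described above: the density values, the threshold parameter and all names are assumptions for the example, not the JVM’s real data structures or tuning values.

```java
// Toy model of the summary phase decision; names and threshold are hypothetical.
class SummaryPhase {
    // density[i] is the fraction of region i occupied by live objects (0.0 to 1.0).
    // Returns the index of the first region that is sparse enough to compact.
    // That region and all regions after it get compacted; regions before it are left alone.
    // Returns density.length when every region is dense enough.
    static int firstRegionToCompact(double[] density, double threshold) {
        for (int i = 0; i < density.length; i++) {
            if (density[i] < threshold) {
                return i;
            }
        }
        return density.length;
    }

    public static void main(String[] args) {
        double[] density = {0.95, 0.90, 0.40, 0.99};
        System.out.println("compact from region "
                + firstRegionToCompact(density, 0.80));
    }
}
```

Note that in this model the last region is compacted even though it is dense, because everything after the first sparse region is compacted.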

The parallel compacting collector can be activated by using -XX:+UseParallelOldGC

The collector is efficient on machines with more than one CPU and for applications that have higher requirements regarding pause times, since a full GC will be done in parallel.

The Concurrent Mark Sweep collector

The focus of the Concurrent Mark Sweep (CMS) collector is on reducing pause times rather than improving the throughput. Some Java servers require a large heap, leading to major collections that can take a while to complete. This behavior introduces long pause times, which is why this GC has been introduced.

The young generation collection is done the same way as a young generation collection in the throughput collector.

The biggest part of the old generation collection occurs in parallel with the application threads, resulting in shorter pause times. The CMS starts a GC before the tenured space is full; its goal is to finish the collection before the tenured space runs out of room. Due to fluctuations in the load of a server, the tenured space can fill up more quickly than the CMS GC can finish. At that moment the CMS GC stops and a serial GC takes place. The CMS has 3 major phases:

- Initial mark: the application threads will be stopped to see which objects are directly reachable from the java code.

- Concurrent mark: during this phase the GC determines which objects are still reachable from the set of the initial mark. Application threads keep on running during this phase. As a consequence this phase can’t determine all reachable objects (since the application threads are still running, new objects can be created). For this reason a third phase is required.

- Remark: an extra check is performed on the set of objects from the concurrent mark phase. Application threads are interrupted, but this phase makes use of multiple threads.

The CMS GC doesn’t make use of compacting, resulting in less time spent during GC, but adding additional cost during object allocation.

Another disadvantage is that this GC introduces floating garbage. During the concurrent mark phase application threads are still running, so objects that were marked live, or that moved to the tenured space in the meantime, can become dead before the collection ends. These dead objects can only be cleaned during the next GC. As a result this GC requires additional heap space.

The CMS can be activated by using -XX:+UseConcMarkSweepGC

The collector is efficient on machines with more than one CPU and for applications requiring low pause times rather than high throughput. It’s also possible to enable CMS for the permgen space by adding -XX:+CMSPermGenSweepingEnabled

JVM ergonomics were introduced in JDK 5 and make the JVM do some kind of “self tuning”. This is partially based on the underlying platform (hardware, OS, …). Based on the platform a specific GC and heap size will be chosen automatically. On the other hand it’s possible to define a desired behavior (pause time and throughput), resulting in the JVM sizing its heap automatically to meet the desired behavior as well as possible.

Regarding the platform the JVM makes a distinction between a client and a server class machine. A server class machine is a machine with at least 2 CPUs and at least 2GB of RAM.

In case of a server class machine the following options will be chosen automatically:

- Throughput GC

- Min heap size = 1/64 of the available physical memory with a maximum of 1GB

- Max heap size = ¼ of the available physical memory with a maximum of 1GB

- Server runtime compiler

The desired behavior can be defined as follows:

- The desired maximum pause time can be defined by setting -XX:MaxGCPauseMillis=<nnn> where <nnn> is the time defined in milliseconds.

- The desired throughput goal can be defined by setting -XX:GCTimeRatio=<nnn>. The ratio of GC time versus application time is 1 / (1 + <nnn>). This means that by setting -XX:GCTimeRatio=19 a maximum of 1 / (1 + 19) = 5% of the time will be spent on GC.

- If both the maximum pause time and the throughput goal can be met, the JVM will try to meet the footprint goal by reducing the heap size
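The arithmetic behind these ergonomics rules can be written down in a few lines. The sketch below only restates the formulas given in this post (1/64 and ¼ of physical memory, both capped at 1GB, and 1 / (1 + <nnn>) for the GC time ratio); the class and method names are hypothetical, and the real defaults depend on the JVM version.

```java
// Restates the formulas from the text; names are hypothetical.
class ErgonomicsMath {
    static final long GB = 1024L * 1024L * 1024L;

    // Default minimum heap on a server class machine: 1/64 of physical memory, at most 1GB.
    static long defaultInitialHeap(long physicalBytes) {
        return Math.min(physicalBytes / 64, GB);
    }

    // Default maximum heap on a server class machine: 1/4 of physical memory, at most 1GB.
    static long defaultMaxHeap(long physicalBytes) {
        return Math.min(physicalBytes / 4, GB);
    }

    // Upper bound on the fraction of time spent in GC for -XX:GCTimeRatio=<ratio>.
    static double maxGcFraction(int ratio) {
        return 1.0 / (1.0 + ratio);
    }

    public static void main(String[] args) {
        long physical = 2 * GB; // assumed machine with 2GB of RAM
        System.out.println("initial heap MB: " + defaultInitialHeap(physical) / (1024 * 1024));
        System.out.println("max heap MB: " + defaultMaxHeap(physical) / (1024 * 1024));
        System.out.println("GC fraction for ratio 19: " + maxGcFraction(19));
    }
}
```

For the assumed 2GB machine this gives a 32MB initial heap and a 512MB maximum heap. From 4GB of physical memory upwards the maximum heap stays capped at 1GB.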
